Based on my post, LMS Satisfaction Features and Barriers, I received some feedback that the numbers didn't correspond to what other research has shown. As a case in point, in my post I said:

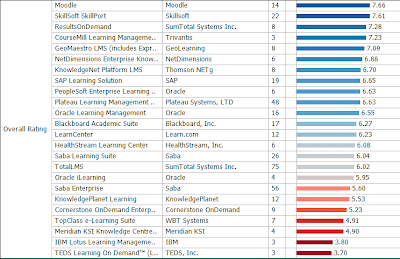

To me, it's interesting to see news like Cornerstone OnDemand Raises $32M (Sept. 17, 2007) when their satisfaction score is being reported so low. That seems like a disconnect. I know the folks from Cornerstone, and their product does some really interesting things. They are moving towards a broader suite that covers other aspects of Talent Management. But given the number of respondents who rated them low on satisfaction, it would give me pause during a selection process (which makes me worried about their sales), and it would also have worried me as a potential investor.

It was pointed out to me that Cornerstone has been rated much higher by other researchers, in particular Bersin. Looking at Cornerstone's site:

“Cornerstone’s customers are not only satisfied with their solutions, but also recognize that Cornerstone can grow with their business, as evidenced by their leading loyalty ranking.”

Josh Bersin, Bersin & Associates

“For years, Cornerstone has provided an excellent integrated talent management solution for performance, learning, and succession management. Cornerstone's customers - among some of the world's largest enterprises - tell us that Cornerstone OnDemand's solution is highly configurable and flexible, a key strength in the performance management market… Cornerstone's 'new breed' of integrated talent management is not only in demand among its customer base, but is also catching on across the industry.”

Josh Bersin, Bersin & Associates

Further, according to a PDF sent to me, Bersin's research appears to rate Cornerstone as having market-leading customer satisfaction and customer loyalty numbers.

So which research is valid? What should you believe? Are customers satisfied with Cornerstone or are they not satisfied? And why the discrepancy?

How Research is Conducted

So let's start with how Bersin and the eLearningGuild gather their data. The eLearningGuild asks industry experts to formulate survey questions, which are then sent to its members by email. The membership is pretty large, so the LMS survey gets roughly 1,300 respondents, although responses from multiple people at the same company are combined into a single response. In the case of Cornerstone, 9 of the companies represented in the rating were corporations with 1,000 learners and 1,000 employees. In total, 20 different people rated Cornerstone, representing roughly 18 organizations.

I'm less familiar with the details of Bersin's approach. What they say on their web site is:

Our patent-pending methodology relies on primary research directly to corporate users, vendors, consultants and industry leaders. We regularly interview training and HR managers, conduct large surveys, meet with managers and executives, and seek-out new sources of information. We develop detailed case studies every month. We have the industry's largest database of statistics, financials, trends, and best practices in corporate training (more than 1/2 million data elements).

Normally this means that each vendor that will be covered in the research is asked to provide a list of customers who can be interviewed and respond to the survey. However, their description doesn't make clear who the interviews are with or who receives the "large surveys."

So the eLearningGuild gets survey results by asking a large audience and receiving somewhat random inputs from whoever responds. Bersin takes a directed research approach, likely based on lists provided by the vendors.

Impact

Obviously, these two approaches are likely to produce different results.

Bersin's approach is much more likely to resemble what you would find when you check references during a selection process. The vendor is providing the names of customers, so you would expect these customers to be happy with the product. If they aren't, then you should be pretty worried. Bersin's research also provides more information about where the vendors focus their products, how they position themselves in the market, and other interesting information. In my opinion, though, in terms of evaluating real satisfaction, Bersin's numbers are highly suspect.

The eLearningGuild approach is going to get a much more diverse set of inputs and is much more likely to find people who are not satisfied with the product. If the eLearningGuild reaches a large enough set of a product's customers, and those respondents amount to a random sample of the customer base, then I would tend to believe its numbers over the numbers produced by Bersin.
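To make the difference between the two approaches concrete, here is a small Python simulation. Everything in it is hypothetical: the 200 customers, the 1 to 5 satisfaction scale, the uniform spread of scores, and the assumption that a vendor hands over its 20 happiest customers as references while an idealized open survey reaches 20 customers at random. Real survey respondents are self-selected rather than truly random, so this is a sketch of the principle, not a model of either organization's method.

```python
import random
import statistics


def compare_sampling(seed: int = 42, population_size: int = 200, k: int = 20):
    """Compare mean satisfaction of vendor-picked references vs. a random sample.

    All data is synthetic: scores are drawn uniformly from a hypothetical 1-5 scale.
    """
    rng = random.Random(seed)
    # Hypothetical customer base with satisfaction scores from 1 (low) to 5 (high)
    population = [rng.randint(1, 5) for _ in range(population_size)]

    # Assumption: the vendor supplies its k most satisfied customers as references
    references = sorted(population, reverse=True)[:k]

    # Idealized open survey: k customers chosen uniformly at random
    random_sample = rng.sample(population, k)

    return (
        statistics.mean(population),
        statistics.mean(references),
        statistics.mean(random_sample),
    )


pop_mean, ref_mean, sample_mean = compare_sampling()
print(f"all customers:     {pop_mean:.2f}")
print(f"vendor references: {ref_mean:.2f}")
print(f"random sample:     {sample_mean:.2f}")
```

The reference average sits at or near the top of the scale no matter how the wider customer base feels, while the random sample lands near the population average, give or take sampling noise. With only a handful of random respondents, that noise gets large.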

But the big "if" there is whether you reach enough customers. The eLearningGuild received roughly 1,300 respondents. The problem is that once you go beyond the market leaders, the number of respondents for smaller vendors becomes small. Only 3 companies rated TEDS, and they rated it really low. I'm not sure I'd believe that is representative of all customers.

So, if you are looking at a relatively niche product, the eLearningGuild is not likely to produce meaningful numbers. On the other hand, if you are considering TEDS, the eLearningGuild has found 3 customers who are not happy with the product, and that's good to know.
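How small is too small? One standard way to see it is a confidence interval on the share of satisfied respondents. The Python sketch below uses the Wilson score interval at roughly 95% confidence. The counts are hypothetical: it assumes none of the 3 TEDS respondents counted as "satisfied" (the survey results only say they rated it really low) and compares that with the same result from 30 respondents.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion; z=1.96 gives roughly 95% confidence."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to absorb floating-point rounding at the edges
    return max(0.0, center - half), min(1.0, center + half)


# Hypothetical counts: 0 of 3 respondents satisfied vs. 0 of 30
for satisfied, n in [(0, 3), (0, 30)]:
    low, high = wilson_interval(satisfied, n)
    print(f"{satisfied}/{n} satisfied -> plausible satisfaction rate {low:.0%} to {high:.0%}")
```

With 3 respondents, the plausible satisfaction rate runs from roughly 0% to 56%; with 30, it narrows to roughly 0% to 11%. Three unhappy customers are a real warning sign, but they don't pin down how the wider customer base feels.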

In the case of Cornerstone, despite having glowing reviews from Bersin, there are some customers who are not satisfied with the product. As I said before, that would give me pause during a selection process and would cause me to ask:

- Why are the 9 customers rating Cornerstone lower?

- Was it a feature / needs mismatch?

- Were they having trouble with features?

- Are the dissatisfiers things that I would care about?

- How could I find out?

The last question is probably the most important one. And right now, the unfortunate problem is that it may be relatively hard to answer. The eLearningGuild makes the aggregate findings available, but there's no way to drill down to the specific reasons, nor does it provide any kind of social networking to reach those respondents. Note: Steve Wexler (head of the Guild's research group) and I have discussed how they could do this, but it wouldn't be easy.

So, it's on us to figure it out on our own. What we're describing is essentially what you do during the reference checks near the end of the selection process. This takes some work, so it's definitely not something you should do for more than a couple of vendors.

I would use LinkedIn to find people who've been using the product at various places, especially at companies similar to your own. I would also use the vendor's client list (not their reference list) and either call those clients or again use LinkedIn to network into them and find the right people to talk to. Most people are very happy to share their experience, especially if you are about to select an LMS. However, don't be surprised if you find people who are still in process or otherwise can't be all that helpful. So, it takes some work.

I welcome any thoughts and feedback on this.

Also, I obviously highly recommend using LinkedIn as a means of professional social networking. If you are a LinkedIn user, consider connecting with me.